Our latest UK Learning Analytics Network meeting was kindly hosted by the University of Edinburgh. Sessions varied from details of the range of innovations Edinburgh is involved with, to using assessment data, to student wellbeing and mental health, to current developments with Civitas Learning’s products and Jisc’s learning analytics service.

The hashtag was #jisclan if you want to check the tweets. Video recordings are available for:

The day was introduced by Sian Bayne, Professor of Digital Education & Assistant Principal for Digital Education at Edinburgh. One of the things she discussed was the University’s agreed principles for learning analytics, which are worth repeating:

- As an institution we understand that data never provides the whole picture about students’ capacities or likelihood of success, and it will therefore not be used to inform significant action at an individual level without human intervention;

- Our vision is that learning analytics can benefit all students in reaching their full academic potential. While we recognise that some of the insights from learning analytics may be directed more at some students than others, we do not propose a deficit model targeted only at supporting students at risk of failure;

- We will be transparent about how we collect and use data, with whom we share it, where consent applies, and where responsibilities for the ethical use of data lie;

- We recognise that data and algorithms can contain and perpetuate bias, and will actively work to recognise and minimise any potential negative impacts;

- Good governance will be core to our approach, to ensure learning analytics projects and implementations are conducted according to defined ethical principles and align with organisational strategy, policy and values;

- The introduction of learning analytics systems will be supported by focused staff and student development activities to build our institutional capacity;

- Data generated from learning analytics will not be used to monitor staff performance, unless specifically authorised following additional consultation.

The separate purposes for learning analytics defined by Edinburgh include: quality, equity, personalised feedback, coping with scale, student experience, skills and efficiency.

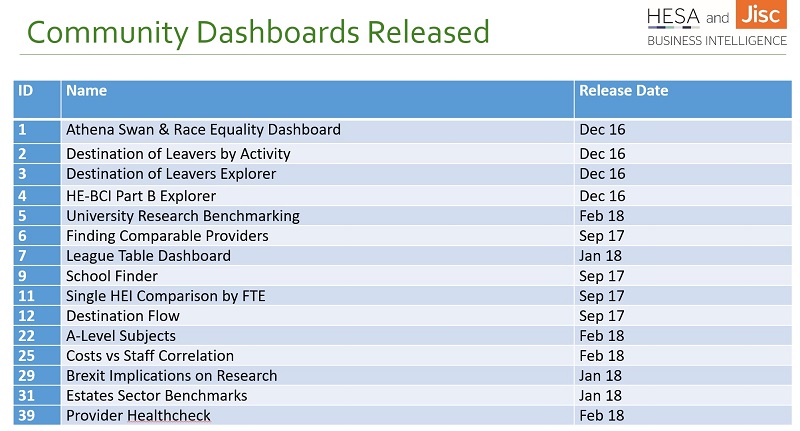

Next we had an update on Jisc’s data and visualisation services from Michael Webb, Rob Wyn Jones and Lee Baylis (ppt 5.6MB).

Working with the Higher Education Statistics Agency (HESA), Jisc has developed a business intelligence shared service for education, which includes analytics labs and community dashboards. These tools allow institutions to review data comparatively from quality sources – and compare themselves with peer organisations.

Our final session before lunch was a workshop on learning analytics and student mental health. Sam Ahern from University College London kicked this off with a presentation (pdf 1.1MB). Sam had examined data relating to Moodle accesses, library and laptop loans, together with student records – and showed details of some of her findings. With a particular interest in student wellbeing, her proposed research questions for institutions with an interest in analytics and mental health are:

- What current wellbeing or personal tutoring policies are in place?

- What data, if any, is supposed to support these?

- What are the data flows?

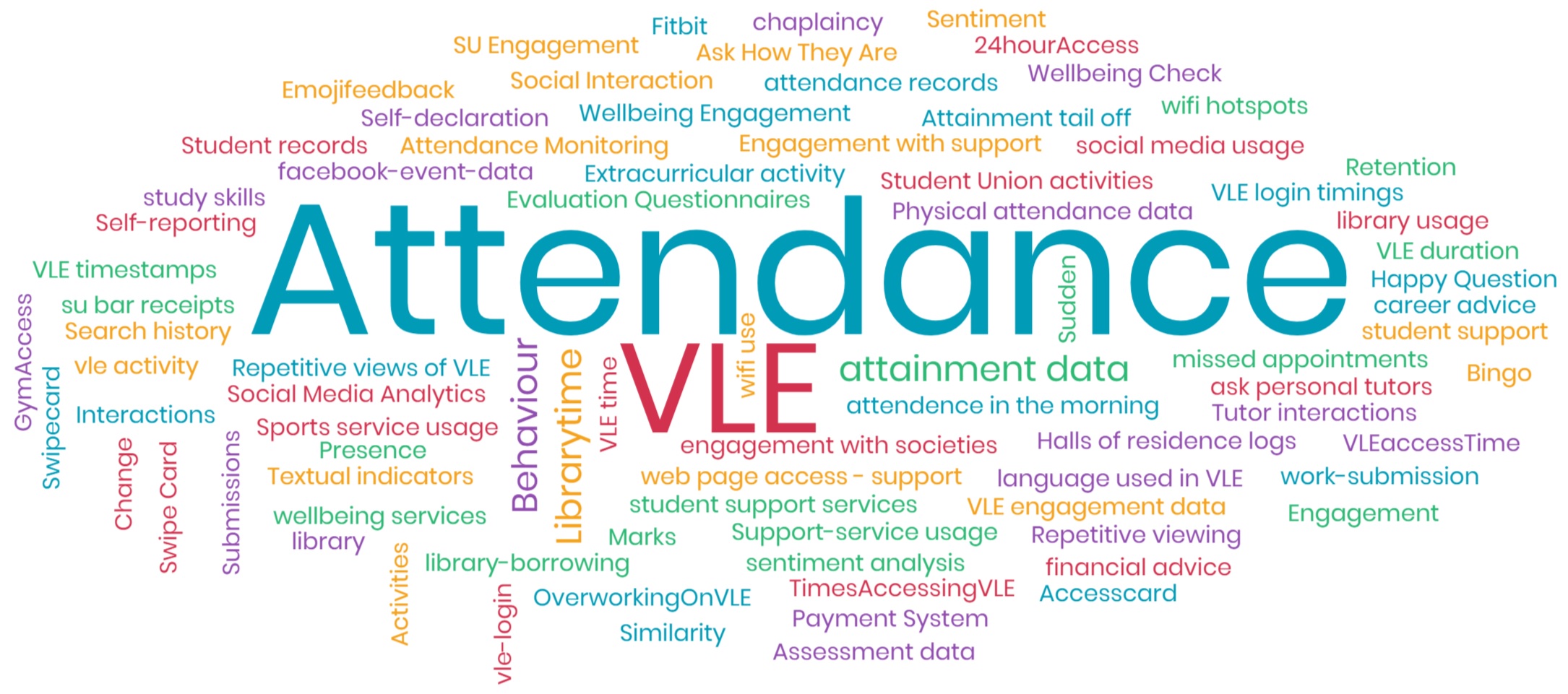

We then opened things out to the audience and asked them to discuss the question:

What data sources would be most helpful for supporting student wellbeing?

The results, below, show that attendance and VLE usage were thought to be the main potential sources. Nothing to do, I’m sure, with the fact I gave these as examples just before asking the question… However, there’s a rich collection of other suggestions here which I shall be poring over in greater detail as we develop our thinking in this area with colleagues.

We then asked the group what challenges or issues they thought would be faced when gathering and using this data. Responses included:

- Resource: do we have the resource to support students using this data? To what extent do we want to use data and algorithms as a sticking plaster for lack of academic resource?

- How comfortable will students be with the data collected, the way it’s shared etc?

- Will some students game the data? Some of them will not be thinking or acting rationally.

- What’s the legality of sharing the data?

- The involvement of a health professional would help

- There’s a balance between duty of care and culpability

- We could be generating sensitive data

- Whose responsibility is it to follow up? Everyone’s? We need coherent processes and should augment those already in place rather than starting from scratch.

- Damage could be caused by poor quality data in dashboards

- Fear of acting. Around a third of institutions do not have a wellbeing strategy in place.

- Getting permission to access social media data would be helpful but is logistically difficult

Finally we asked the question:

Should learning analytics be used to support student wellbeing?

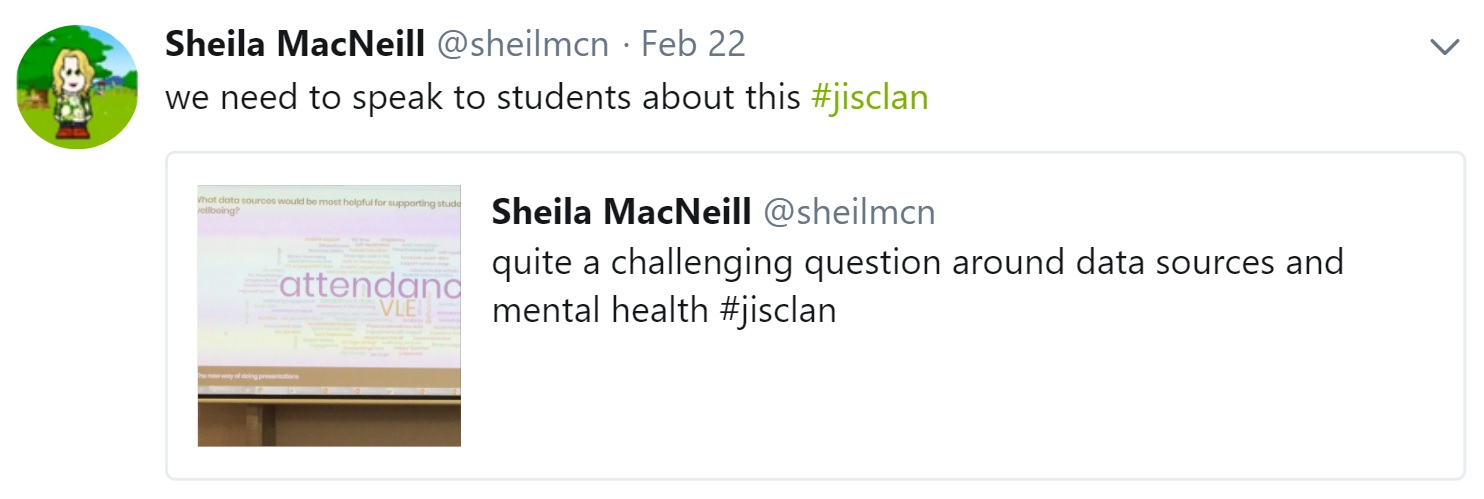

We received 30 responses to this question: 25 said yes and 5 said no. Admittedly this is a self-selected group of people who are likely to be positive about this. My question is: how can we not at least explore the potential of using this data for helping the very many students with mental health issues – and potentially even to save lives? As Sheila MacNeill points out, though, it’s not just staff who should be consulted about this.

After lunch it was the turn of Anne-Marie Scott & Yi-Shan Tsai, with Anne-Marie telling us about the wealth of learning analytics projects at Edinburgh, and Yi-Shan talking in-depth about the Supporting Higher Education to Integrate Learning Analytics (SHEILA) project (ppt 10.3MB).

Anne-Marie discussed the analytics carried out on some of the University’s MOOCs. Almost all participants were studying them “to learn new things”. Many were also doing so for career purposes, though this varied depending on their age. She showed various other interesting visualisations of the MOOC data. They’d found that the data required a lot of cleaning before analysis.

Using Civitas Learning’s Illume product, Edinburgh had also carried out predictive analytics on students’ likelihood of success.

Yi-Shan then discussed the SHEILA project which is building a policy framework for learning analytics, looking at adoption challenges, institutional strategy and policy, and the interests and concerns of stakeholders.

The next session was from Michael Webb, Jisc’s director of technology and analytics, and Kerr Gardner, who is working with Jisc and Turnitin to look at the use of assessment data for learning analytics. In this interactive session, the group fed back their suggested requirements for the data they would find most useful to receive back from Turnitin. Stay tuned to this blog for updates on Kerr’s progress in this area.

Finally we had an interesting presentation from Mara Richard and Chris Greenough of Civitas Learning (pdf 9MB). They explained how their predictive modelling system works and suggested that there are big differences between the UK universities they’d worked with. They looked particularly at modules attempted versus modules earned to help understand student burn-out and resiliency at different institutions.

Mara suggested developing “mindset principles” such as belonging, normalising, goal setting and empathy to help students persist, and developing nudges to encourage certain behaviours. She also issued a call to institutions that wish to work with Civitas’s data platform and predictive modelling in an integrated way with Jisc’s learning data hub.

Our next meeting is likely to be in May, somewhere in England north of Watford Gap. Details will be announced on this blog and via the analytics@jiscmail list.