Last week Paul Bailey and I braved the sweltering heat which had briefly engulfed Birmingham and most of the rest of England to meet with staff and students at Aston University. 30 of us, including representatives from three other universities, spent the day thinking about how institutions can best make use of the intelligence gained from data collected about students and their activities.

We concentrated on two of the main applications of learning analytics:

- Intervening with individual students who are flagged as being at risk of withdrawal or poor academic performance

- Enhancing the curriculum on the basis of data in areas such as the uptake of learning content or the effectiveness of learning activities (which I’ll cover in a later blog post)

In the morning, each of the five groups which were formed chose a scenario and thought about the most appropriate interventions. Here is one scenario which was selected by two of the groups:

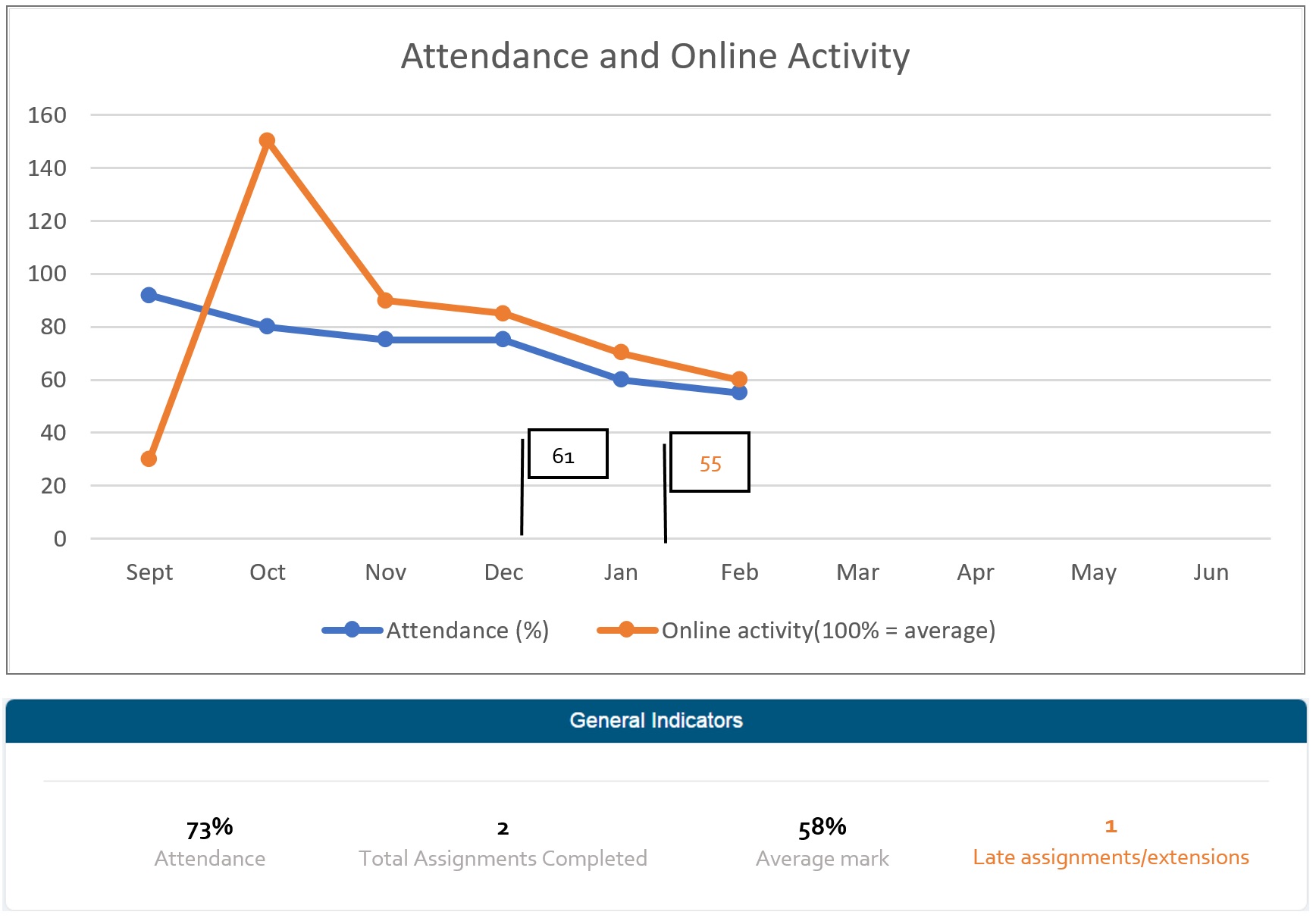

Student Data Example 4: Business student with decreasing attendance and online activity

Student: Fredrik Bell

Programme of Study/Module: Year 1 Business Computing and IT

Background: The data provides a view of attendance vs online activity from the start of the course for the BUS101 module. Fredrik has a low average mark and has made one request for a deadline extension. Attendance has been dropping, and VLE use has dropped off following a peak in October. The IT modules show that his attendance is low but that he has good marks in assignments up to January. [Note that the vertical scale represents this student’s engagement as a percentage of the average for the cohort – thus 150% online activity in October is well above average.]

The groups were then asked to develop an intervention plan consisting of:

- Triggers – what precipitates an alert/intervention

- Assumptions – what other information might you consider, and what assumptions are being made, before you make an intervention

- Types – e.g. reminders, questions, prompts, invitations to meet with tutor, supportive messages

- Message and behaviour change or expectation on the student – suggest possible wording for a message and anticipated effect on student behaviour

- Timing and frequency – when might this type of intervention be made, and how soon must it happen to be effective

- Adoption – what will staff do differently? How will they be encouraged to change their working practices? Which roles will be involved?

- Evaluation – how will the success of the interventions be evaluated

Triggers

The groups specified a variety of triggers for interventions. Attendance at lectures and tutorials was a key one. One group considered 55% attendance to be the point at which an intervention should be triggered.

A low rating in comparison to the cohort average is likely to be a better trigger than a fixed threshold. This is the case for accessing the VLE too – overall online activity well below average for the last 3 weeks was one suggestion. A danger here is that cohorts vary – and a whole class may be unusually engaged or disengaged with their module.

Requesting an extension to an assignment submission was thought to be a risk factor that could trigger an intervention. Low marks (40%) in an assignment could also be used.
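As a rough illustration only, the triggers the groups discussed could be combined into a simple rule check along the following lines. This is a minimal sketch, not something produced at the workshop: the `StudentSnapshot` structure, the field names and the 50% cut-off for "well below average" online activity are all assumptions made for the example, and treating the 55% attendance and 40% mark figures as "at or below" thresholds is also an interpretation.

```python
from dataclasses import dataclass
from typing import List, Optional

# Thresholds mentioned in the workshop discussion (read here as "at or below").
ATTENDANCE_THRESHOLD = 55.0   # % of sessions attended
LOW_MARK_THRESHOLD = 40.0     # % mark in an assignment
ONLINE_WEEKS = 3              # "the last 3 weeks"
# Assumption: "well below average" is taken to mean under 50% of the cohort average.
ONLINE_THRESHOLD = 50.0


@dataclass
class StudentSnapshot:
    """Hypothetical per-module summary for one student."""
    attendance_pct: float
    weekly_online_vs_cohort: List[float]  # each week, as % of the cohort average
    extension_requests: int
    latest_mark: Optional[float]          # None if nothing has been marked yet


def fired_triggers(s: StudentSnapshot) -> List[str]:
    """Return the names of all triggers that apply to this snapshot."""
    fired = []
    if s.attendance_pct <= ATTENDANCE_THRESHOLD:
        fired.append("low_attendance")
    recent = s.weekly_online_vs_cohort[-ONLINE_WEEKS:]
    # Only fire once a full three weeks of data exist and all are well below average.
    if len(recent) == ONLINE_WEEKS and all(w < ONLINE_THRESHOLD for w in recent):
        fired.append("low_online_activity")
    if s.extension_requests > 0:
        fired.append("extension_requested")
    if s.latest_mark is not None and s.latest_mark <= LOW_MARK_THRESHOLD:
        fired.append("low_mark")
    return fired
```

Because online activity is already expressed relative to the cohort, the check does not allow for the case where a whole class is unusually engaged or disengaged. The fixed cut-offs are placeholders that would ideally be replaced by values derived from historic data.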

An issue which I was acutely aware of was that ideally we should have the input of a data scientist in helping to decide what the triggers should be. For example, an attendance rate used to indicate whether a student is at risk is likely to be more accurate if it’s based on the analysis of historic data. Combining statistical input with the judgement of experienced academics and other staff may be the best way to define the metrics.

Assumptions

It’s likely of course that engagement will vary across modules and programmes within an institution, where different schools or faculties will have different approaches to the importance of attendance – or even whether or not it’s compulsory. One student mentioned that some lecturers have fantastic attendance rates, while students quickly stop going to boring lectures. The fact that they’re not attending the latter doesn’t necessarily imply that they are at risk – though it might suggest that the lecturer needs to make their lectures more engaging!

Events such as half-term holidays, reading weeks etc would also need to be taken into account. Meanwhile, if Panopto recordings of lectures are being made, then student views of these might need to be considered as a substitute for physical attendance.

The use of the VLE varies dramatically too, of course, between modules. Is regular access necessary or has the student accessed the module website and printed out all the materials on day 1?

One group suggested that intervention in a particular module should consider engagement in other modules too. A common situation is that a student has high engagement with one module which has particularly demanding requirements to the detriment of their engagement in less demanding modules.

If low marks are being used as a trigger, it may be too late to intervene by the time the assessment has been marked. More frequent use of formative assessments was suggested by one group.

Another assumption mentioned was that low VLE usage and low physical attendance do actually correlate with low attainment. A drop in attendance or in VLE use may not necessarily be a cause for concern. Again, analysis of historic data may be the best way to decide this.

Types of intervention

Push notifications (to a student app or the VLE), emails and/or text messages sent to the student by the relevant staff member were suggested. These might consist of an invitation to meet with a personal tutor. Some messages might best go out from the programme director (sent automatically with replies directed to a support team).

Other options were to send a written communication or to approach a student at a lecture to attempt to ensure they attend a follow-up meeting (though if they’re disengaged, you probably won’t find them in the lecture, and of course in large cohorts, lecturers may not know individual students…)

One risk that was noted was that multiple alerts could go out from different modules around the same time, swamping the student.
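One way to reduce that risk would be to consolidate alerts before anything is sent, so that a student receives a single message covering several modules rather than one per module. The sketch below is an assumption-laden illustration: the tuple layout, the `consolidate_alerts` name and the seven-day window are invented for the example.

```python
from datetime import date, timedelta
from typing import Dict, List, Tuple

Alert = Tuple[str, str, date]        # (student_id, module, date raised)
Batch = Tuple[str, date, List[str]]  # (student_id, date of first alert, modules)


def consolidate_alerts(alerts: List[Alert], window_days: int = 7) -> List[Batch]:
    """Group each student's alerts that fall within window_days of the
    first alert in a batch, so that one message can cover them all."""
    window = timedelta(days=window_days)
    batches: List[Batch] = []
    open_batch: Dict[str, int] = {}  # student_id -> index of their latest batch

    # Process each student's alerts in date order.
    for student_id, module, raised_on in sorted(alerts, key=lambda a: (a[0], a[2])):
        idx = open_batch.get(student_id)
        if idx is not None and raised_on - batches[idx][1] <= window:
            if module not in batches[idx][2]:
                batches[idx][2].append(module)
        else:
            batches.append((student_id, raised_on, [module]))
            open_batch[student_id] = len(batches) - 1
    return batches
```

Each resulting batch could then be turned into one message from the personal tutor or programme director, whichever the institution decides is appropriate.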

Ideally, one group suggested, the messages would be recorded in a case management system so that allied services would be informed and could assist where appropriate. Interventions would be viewable on a dashboard which could be accessed by careers, student services etc (with confidentiality maintained where appropriate).

Timing and frequency

A sequence of messages was suggested by one group:

- A message triggered by extension request or late submission

- If no response is received from the student after a week, a follow-up message expressing concern is sent
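The two-step sequence above could be expressed as a small decision function that a daily job might call. This is only a sketch under stated assumptions: the function name, its parameters and the message label are hypothetical, and "a week" is taken to mean seven calendar days.

```python
from datetime import date, timedelta
from typing import Optional

FOLLOW_UP_AFTER = timedelta(days=7)  # "after a week", read as seven calendar days


def next_message(first_sent_on: date, student_replied: bool,
                 follow_up_sent: bool, today: date) -> Optional[str]:
    """Decide whether the follow-up message is due today.

    Returns a label for the message to send, or None if nothing is due.
    """
    if student_replied or follow_up_sent:
        return None  # the dialogue has started, or the follow-up has already gone out
    if today - first_sent_on >= FOLLOW_UP_AFTER:
        return "follow_up_expressing_concern"
    return None  # still within the first week
```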

Another group suggested that an intervention would need to be made in the first 4 weeks or it might be too late. If low marks are being used as a trigger, this would have to happen immediately after marking – waiting for subject boards etc to validate the marks would miss the opportunity for intervention.

Points during the module e.g. 25%, 50% and 75% of the way through could be used as intervention points, as happens at Purdue University.
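Working out such review points from a module's start and end dates is straightforward. The following is a minimal sketch with a hypothetical `review_points` function; it is not a description of how Purdue or any other institution calculates them, and it ignores reading weeks and holidays.

```python
from datetime import date, timedelta
from typing import List, Sequence


def review_points(start: date, end: date,
                  fractions: Sequence[float] = (0.25, 0.5, 0.75)) -> List[date]:
    """Return the calendar dates falling at the given fractions of the module."""
    length_days = (end - start).days
    return [start + timedelta(days=round(length_days * f)) for f in fractions]
```

For a twelve-week module running from 28 September to 21 December 2015, this gives 19 October, 9 November and 30 November.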

Messages

A lot of thought is put into the best wording for messages by some institutions. One suggestion at our workshop, with the aim of opening a dialogue with the student to gauge if further support is necessary, was:

I noticed that your coursework was submitted late. I hope everything is OK. Let me know how you are doing.

Another group thought that a text message could be sent to the student, which would maximise the chance of it getting through to them. It would ask them to check an important email which has been sent to them. The email would say:

- We are contacting you personally because we care about your learning

- We would like to have a chat

- Why we would like to have a chat – concerns about attendance and online engagement, and risk that this might translate to poor performance in assessments coming up

- Make student aware of support networks in place

The use of “soft” or “human” language, which relates to the student, was thought to be important. Short, sharp, concise and supportive wording was recommended. A subject line might be: “How to pass your module” or “John, let’s meet to discuss your attendance”.

I did have a conversation with one participant at lunchtime who thought that we need to be clear and firm, as well as supportive, with students about their prospects of success. If we beat about the bush or mollycoddle them too much, they’re going to get a nasty shock when they encounter firmer performance management in the workplace.

Adoption

A couple of groups managed to get onto this part of the exercise. Numerous projects involving learning technologies have failed at this hurdle – it doesn’t matter how useful and innovative the project is if it’s not adopted effectively into the working and study practices of staff and students. What I was hoping to see in this part was more mention of staff development activities, staff incentives, communication activities etc. but most people had run out of time.

One group did, however, suggest defining a process to specify which staff are responsible for which interventions when certain criteria are met. A centralist approach could be less effective, they thought, than a personal one – so ideally a personalised message derived from a template would be sent from a personal tutor. Retention officers would be key players in the planning and operationalisation of intervention processes.

It was also thought that the analytics should be accessible by more than one member of staff e.g. the module tutor, programme director, personal tutor and retention officer in order to determine if the cause for concern is a one-off or whether something more widespread is happening across the cohort or module.

Evaluation

One of my personal mantras is that there’s no point in carrying out learning analytics if you’re not going to carry out interventions – and that there’s no point in carrying out interventions unless you’re going to evaluate their effectiveness. To this end, we asked the groups to think about how they would go about assessing whether the interventions were having an impact. Suggestions from one group included monitoring whether:

- Students are responding to interventions by meeting with their personal tutor etc as requested

- Students submit future assessments on time

- Attendance and/or online engagement improve to expected levels

Another group proposed:

- Asking students whether the intervention has made a difference to them

- Analysing the results of those who have undergone an intervention
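Several of these measures could be summarised from a simple record kept for each student who received an intervention. The sketch below assumes a hypothetical record format (the dictionary keys are invented for the example) and takes "expected levels" to mean 100% of the cohort average, which is an assumption.

```python
from typing import Dict, List, Optional


def share(flags: List[bool]) -> Optional[float]:
    """Proportion of True values, or None if there are no records."""
    return sum(flags) / len(flags) if flags else None


def evaluation_summary(records: List[dict],
                       expected_level: float = 100.0) -> Dict[str, Optional[float]]:
    """Summarise outcomes for students who received an intervention.

    Each record is assumed to hold:
      met_tutor               - did the student attend the requested meeting?
      next_submission_on_time - was their next assessment submitted on time?
      engagement_after        - later engagement as % of the cohort average
    """
    return {
        "response_rate": share([r["met_tutor"] for r in records]),
        "on_time_rate": share([r["next_submission_on_time"] for r in records]),
        "engagement_recovered_rate": share(
            [r["engagement_after"] >= expected_level for r in records]
        ),
    }
```

Figures like these show what happened after an intervention, not that the intervention caused it. Comparing against similar students who were not contacted would be needed to say more.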

Engagement by the participants during the morning session would no doubt be rated as “high”, with some great ideas emerging, and some valuable progress made in developing the thinking of each institution in how to plan interventions with individual students. In my next post, I’ll discuss how the afternoon session went, where we examined using learning analytics to enhance the curriculum.

3 replies on “Planning interventions with at-risk students”

Hi Niall,

Trawling for information on initiatives supporting at-risk undergraduate students. Wondered if you are aware of any follow-up to the session you report upon in this post?

Thanks.

Simon

Hi Simon

We’ve held this session again a couple of times and it appeared to engage the participants and stimulate their thinking about interventions but there have been no other follow-up activities.

Cheers

Niall

I recently ran a “manual” learning analytics project across ten programmes for two years. Data on student attendance, unofficial assessments, official assessments and VLE access was accessed and maintained in a spreadsheet per programme by an intern. Regular four-weekly meetings between the intern, each programme leader and their APT were held to consider what to do about students at various levels of risk. Students at critical risk were deemed to be those whose attendance and academic performance were poor on 3 or 4 out of their 4 core year-one modules. These were invited for interviews. Of the approximately 70% who attended, it was very interesting to note that for many the causes of poor performance – missed first four weeks due to visa problems, wrong course, accommodation problems, mental health issues, family issues, relationship issues and others – were various and not particularly related to either the course, or the teaching of the course. Depending on the problem, we were either able to help directly or signpost students to various university services e.g. Student Support or Well Being Support.