Warning: this is a fairly geeky post. I’ve attempted to make it understandable but feel free to stop reading now if you’re not interested in the technical details of the data behind learning analytics.

It is, however, really important work – a key building block for the useful analytics on students and their learning that the institutions working with Jisc will shortly be able to carry out. In order to share experiences we invited some of our pioneering university partners to join us for a “UDD Bootcamp” at Jisc’s offices in Bristol on 9th December, 2016. I arrived late after a joyous journey on England’s rail network to find Michael Webb demonstrating the Data Explorer tool (see earlier blog post).

The UDD

The main point of the day was to work together on the UDD. This is short for the Universal Data Definitions (a term coined by Rob Wyn Jones), which specify how data about students should be formatted. These are fields such as the courses they’re studying and the grades they’ve obtained, as well as demographic information.

You can view the full specification for the UDD on Jisc’s GitHub site. You can also see the entire structure there at a glance.

These data fields don’t change too often. The other key type of data that is needed for learning analytics however is details of the learning-related activities carried out by students. These come from systems such as the VLE, library management systems and attendance monitoring systems. We’re using the eXperience API (xAPI) to capture this.

All of this data is loaded into the Learning Records Warehouse (LRW), held in the cloud and hosted by Jisc on behalf of each institution.

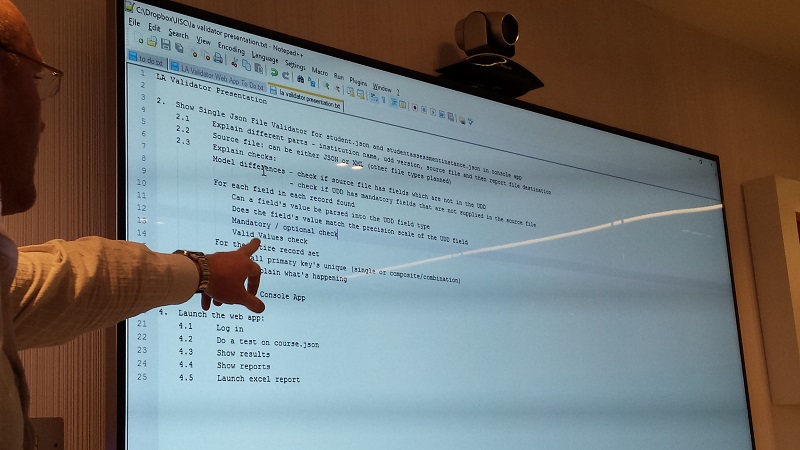

In the next session Rob discussed a validator for the UDD that we’ve developed, which assesses whether the data has been submitted in the correct format and builds in some quality checks too. UDD data can’t be loaded into the LRW unless it’s been validated by this tool.

The validator is being used primarily for loading in historic data. That’s required to develop the predictive models to be used with current students. However the same engine will be used for the near live feed. The software integrates with the GitHub site discussed above, drawing in the current data definitions on the fly to ensure that the data conforms to the specification. So there are no hard and fast rules built into the validator itself. It’ll also link to earlier versions of the UDD if required.

Assessment data

At lunchtime I chatted to Rob about assessment data. I wondered why it was being transferred via the UDD and not through xAPI: as assessment is a kind of dynamic learning activity, that seemed more logical to me. He felt that assessment data can be regarded as a kind of “slow” activity data. Summative results and grades are a formal part of a student’s learning record, held in the student information system, and may be appropriate to keep in the UDD, while perhaps more frequent, formative assessment could be seen as learning activities and therefore come via xAPI.

Later, the wider group carried on the discussion. Many of the participating institutions are using Tribal SITS as their student information system. The data models have similarities with the UDD so can be translated quite easily. But different institutional processes define at what point assessment data is transferred to the SIS. One university transfers all of its assessment data into SITS from the VLE at the last minute. That means it could be too late to do anything useful with it for predictive analytics.

Out of all this came the suggestion that we need to give guidance to institutions on why it’s important to get assessment data into the LRW as early as possible. And not just assessment data. Ultimately this might mean institutions having to change their processes in order to maximise the usefulness to them of the data they’re accumulating. We’ll try to share the emerging experience as our partners work out what works best for them.

We wondered if we need to provide more information on the elements of the UDD for institutions. Have we described the data well enough? Trying to get a common understanding of the data fields is difficult enough in one institution, depending on the person’s role or department – let alone across institutions. But there’s bound to be a lot of commonality – and people felt that the descriptions were clear enough.

More data sources

Later we looked at what other data could be useful. Module evaluation data is one area that is likely to prove informative. Reading lists are also potentially of interest, and mapping the reading requirements of the course to library records to see what students are actually accessing. The next step could be to see if there is any correlation with academic success.

Participants also discussed the usefulness and ethical aspects of using Wi-Fi records as a source of activity data.

Data on any interventions carried out was thought to be crucial. There are issues such as what happens when a tutor meets a student who is flagged to be at risk, and then decides that the student is not actually at risk. There needs to be a way of logging this and having the analytics adjusted accordingly so they don’t continue to be flagged as being at risk. Unless of course something else changes.

The intention is for Jisc to develop xAPI recipes for additional data sources such as these.

Sharing experience in big data

There’s some complex processing going on behind the scenes due to the intense requirements of dealing with hundreds of thousands of assessment records, for example. We’ve been finding that there’s not a lot of experience in institutions of dealing with such quantities of data. So this is perhaps one of the advantages of participating in Jisc’s learning analytics architecture: pooling our technical experience in issues around processing big data.

There’s also not a lot of experience in sensemaking around the data either – so working on this jointly is likely to be beneficial. One participant mentioned that there’s so much data that the most useful thing for him would be for the processing to cut it down dramatically and return him just the useful stuff.

We’re co-organising a hackathon at the LAK conference in March (link) to explore such issues further but it was suggested that we should do one in the UK as well. The idea would be to get people like librarians, tutors and hackers together to work on the data and build useful tools and visualisations. If you’re interested in that or other aspects of Jisc’s architecture and learning analytics service, you can sign up to our analytics@jiscmail list.

One reply on “Exploring issues around data for Jisc’s learning analytics architecture”

Found this really interesting and useful, thanks Naill