Last Friday, representatives from a number of early adopter universities came together at Jisc’s Bristol offices to work on some of the areas that need to be tackled in their institutional learning analytics projects.

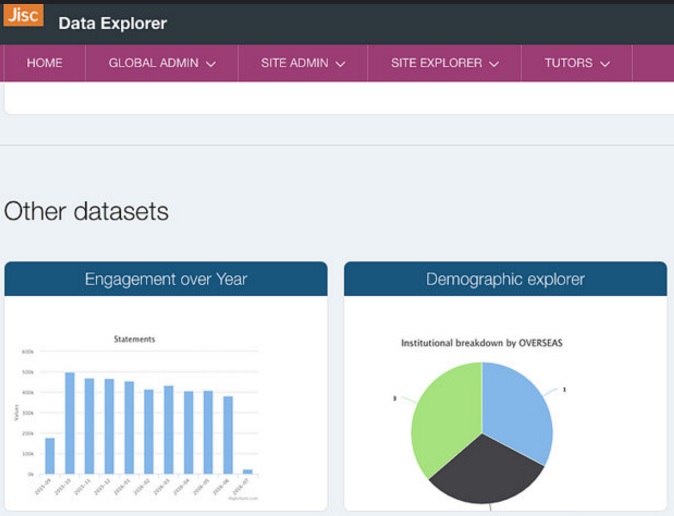

Jisc’s Director of Data and Analytics, Michael Webb, demonstrated a new Data Explorer tool. It provides visualisations that let institutions explore the data they hold in the learning analytics warehouse. Michael led a discussion on which additional features would be most useful. The resulting suggestions, and progress on implementing them, are recorded on the tool’s GitHub site, which also hosts a wiki with more details of the product.

Institutions will also be able to access their data via an API, or to integrate one of several more feature-rich commercial products that provide staff dashboards or alert and intervention systems.

I took participants through the new templates for an institutional learning analytics policy and a student guide, based on our work with the University of Gloucestershire (see previous blog post), and received some valuable feedback.

In the afternoon, participants took part in three different discussion groups, centred around (1) culture, (2) ethical & legal issues and (3) data.

Culture

Paul Bailey facilitated this discussion, which looked at cultural challenges and opportunities in institutions. The role of the tutor in learning analytics was seen as critical. The tutoring model is not consistent across subject areas and faculties, for example, which might complicate analysis of the data. Tutors may fear that more information will require more interventions, extending their pastoral role and increasing their workload. A concern was also expressed that statistics would be used to drive efficiency rather than to support students more effectively.

Opportunities noted by participants included bringing holistic information about each student together in one place. This would make it easier for learners to access self-development opportunities. Tutors would be able to identify worrying patterns of behaviour among their students and receive early warning of students at risk.

It was also felt that analytics would help academics to examine how they use the VLE, and perhaps to evaluate other aspects of their teaching. However, more continuous assessment might be needed, to provide more checkpoints for judging whether students are on track.

Staff buy-in was seen as a key priority. Suggestions to help achieve this included:

- Engage both staff and students in the steering group and in the change process

- Communicate effectively to stakeholders about what you are doing

- Focus on learning and teaching, and develop learning analytics champions in faculties

- Ensure the ongoing support of senior management

- Develop a policy that links with existing policies, and pay particular attention to transparency around data sources and processing

- Help students and staff to understand the data and predictions, not to treat them as gospel, and to recognise that engagement data is only a proxy for learning

- Start with small scale pilots with interested staff, then be ready to scale up to the rest of the institution

- Ensure that learning analytics makes things easier and better than what is currently available to staff, and engage sceptics by finding metrics that can assist them

- Be clear about why analytics are being introduced and the benefits they bring, or any new accountability requirements they address

- Understand any reputational benefits to the institution of introducing learning analytics (e.g. better student support or personalisation)

- Encourage students to drive the adoption of learning analytics: once they see the benefits, they will expect staff to use the systems

- See whether learning analytics can help meet requirements for the Teaching Excellence Framework

Ethical and legal issues

I facilitated this discussion, which covered some of the issues identified in the taxonomy of ethical, legal and logistical issues for learning analytics, and brought out some new ideas too.

Someone made the point that your doctor might tell you that you have a 50% chance of survival if you carry on smoking. Why, they asked, should there be objections to doing something similar in education? Well, here are some of the concerns:

There is a danger of drawing the wrong conclusions from the data, and we need to train both staff and students about what predictions mean – they’re not facts. If a student doesn’t engage with the VLE, for example, is that their fault or is it because you haven’t ensured that the VLE meets their needs?

Someone else suggested that, as long as the analytics act as a catalyst for discussion about learning, it may not matter too much if they are not completely accurate. A fair point, but that doesn’t excuse us from striving to produce the highest quality analytics.

Another point raised was that different students need to be treated differently. One might need hand-holding and sensitive encouragement; the next might need “a kick up the backside”. In fact, one participant reported that students had come to her asking specifically for such treatment (metaphorically) to get them back on track!

There is also the potential problem of unfair categorisation. For example, if the analytics show that students of one nationality are more likely to succeed academically than those from another country, might a tutor treat a student from the second country less favourably? A further concern is that personal tutors have varying levels of emotional intelligence, and some will be better than others at tailoring their interventions to individual students.

We also had an interesting discussion about whether we should be using data such as a learner’s socio-economic group in the analytics. The consensus, I think, was that we shouldn’t be squeamish about using any of the data (assuming relevant legal requirements are adhered to). If you are from a low-income group, for example, and this, combined with your engagement and grade data, puts you at high risk, then we should take it into account when trying to maximise your chances of success.

It was agreed that data should only be passed outside the institution, e.g. to employers, with the consent of the student. This is a legal requirement in Europe, in any case. There was also a consensus that learning analytics should not be used for recruitment. The worry is that institutions might reject applicants who didn’t match the profile of a student likely to succeed. Of course, this already happens to some extent through entrance qualifications and applicants’ personal statements. However, disadvantaging an applicant at the recruitment stage because they come from a low socio-economic group, for example, would be unethical.

Data

This discussion, facilitated by Rob Wyn Jones, covered various issues relating to assessment data:

- There was concern that Turnitin does not allow an assignment’s due date or grade, both key indicators, to be returned via its API for use in analytics. Assignments submitted through Blackboard do allow this.

- It was decided that a submission date field needs to be added to STUDENT_ON_ASSESSMENT_INSTANCE in the Universal Data Definition (UDD)

- Degrees of lateness need to be captured too, e.g. is the assignment late with mitigation, late with permission, or covered by a granted extension?

- Could Turnitin or Blackboard’s SafeAssign (both plagiarism detection systems) provide similarity indexes or a plagiarism score, which could then be held within the UDD for use in predictive modelling and descriptive analytics?
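To make the proposed assessment fields a little more concrete, here is a minimal Python sketch of what a submission record might look like once a submission date, a lateness category and a similarity score are captured. The class, field and category names are hypothetical illustrations drawn from the discussion above; they are not the actual UDD definition of STUDENT_ON_ASSESSMENT_INSTANCE.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class Lateness(Enum):
    # Hypothetical categories, based on those raised in the discussion
    ON_TIME = "on_time"
    LATE = "late"  # late, with no permission or mitigation recorded
    LATE_WITH_MITIGATION = "late_with_mitigation"
    LATE_WITH_PERMISSION = "late_with_permission"
    GRANTED_EXTENSION = "granted_extension"


@dataclass
class AssessmentSubmission:
    # Hypothetical fields, loosely modelled on a student-on-assessment-instance record
    student_id: str
    assessment_id: str
    due_date: date
    submission_date: Optional[date] = None    # the proposed new field
    lateness: Optional[Lateness] = None       # recorded by staff, if known
    similarity_index: Optional[float] = None  # e.g. a plagiarism-checker score, if exposed
    grade: Optional[float] = None

    def days_late(self) -> Optional[int]:
        """Days after the original due date (0 if on time), or None if not yet submitted."""
        if self.submission_date is None:
            return None
        return max(0, (self.submission_date - self.due_date).days)

    def classify(self) -> Optional[Lateness]:
        """Prefer the recorded category (dates alone cannot tell an extension
        from unauthorised lateness); otherwise derive a basic one from the dates."""
        if self.lateness is not None:
            return self.lateness
        days = self.days_late()
        if days is None:
            return None
        return Lateness.ON_TIME if days == 0 else Lateness.LATE


# Usage: a submission three days after the due date, with no recorded category
s = AssessmentSubmission("s123", "essay1", date(2020, 3, 1), submission_date=date(2020, 3, 4))
print(s.days_late())  # 3
print(s.classify())   # Lateness.LATE
```

The recorded category takes precedence over the date arithmetic because, as the group noted, the same number of days late can mean very different things depending on whether mitigation, permission or an extension applies.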

It was suggested that the functionality of Jisc’s student app, now known as Study Goal, should also be provided through a web browser. APIs are also required so that institutions can extract data from the learning records warehouse into their own web apps, or analyse it with systems such as Tableau or Hadoop. Additional data sources suggested include links to the National Student Survey.

A UDD validation tool will be provided, and this will use the same engine to assess historic data and live data. A roadmap for Jisc’s learning analytics products will be published in due course. For anyone wanting further details on data and technical issues, stay tuned for my next post on the following week’s meeting of techies in Bristol.